Online sequential-decision making via bandit algorithms, modeling considerations for better decisions (Keynote Talk @ ALBECS-2024, 19th International Conference on Persuasive Technology 2024)

Abstract

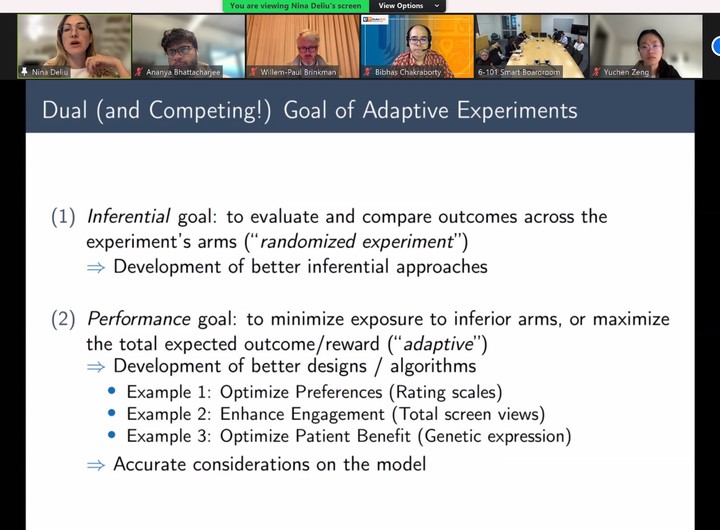

The multi-armed bandit (MAB) framework holds great promise for optimizing sequential decisions online as new data arise. For example, it can be used to design adaptive experiments that yield better participant outcomes and improved statistical power at the end of the study. However, for reasons of mathematical and computational convenience, most MAB variants have been developed and implemented under binary or normal outcome models. In this talk, guided by three biomedical case studies we have designed, I will illustrate how traditional statistics can be integrated within this framework to enhance its potential. Specifically, I will focus on the most popular Bayesian MAB algorithm, Thompson sampling, and on two types of outcomes: (i) rating scales, which are increasingly common in recommendation systems, digital health and education, and (ii) zero-inflated data, which are characteristic of mobile health experiments. I will present theoretical properties and empirical advantages in terms of balancing exploitation (outcome performance) and exploration (learning performance). Further considerations will be provided for the unique and challenging case of (iii) small samples. These works are the result of collaborations with Sofia Villar (University of Cambridge), Bibhas Chakraborty (National University of Singapore) and the IAI Lab (University of Toronto), among others.
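To make the rating-scale setting mentioned above concrete, here is a minimal sketch of Thompson sampling in which each arm's ratings follow a multinomial distribution with a Dirichlet prior. This is an illustrative assumption, not necessarily the model used in the talk: the function name `thompson_rating`, the uniform Dirichlet(1, ..., 1) prior, the use of the expected rating as the quantity to maximize, and the simulated rating probabilities are all hypothetical choices.

```python
import numpy as np


def thompson_rating(true_probs, horizon, rng):
    """Thompson sampling for ratings 1..K under a Dirichlet-multinomial model.

    true_probs: (n_arms, K) array of rating probabilities, used only to
    simulate responses (hypothetical values, unknown to the algorithm).
    """
    true_probs = np.asarray(true_probs, dtype=float)
    n_arms, n_levels = true_probs.shape
    levels = np.arange(1, n_levels + 1)        # rating values 1..K
    alpha = np.ones((n_arms, n_levels))        # assumed Dirichlet(1,...,1) prior
    pulls = np.zeros(n_arms, dtype=int)

    for _ in range(horizon):
        # Draw one plausible rating distribution per arm from its posterior.
        sampled = np.array([rng.dirichlet(a) for a in alpha])
        # Play the arm whose sampled distribution has the highest mean rating.
        arm = int(np.argmax(sampled @ levels))
        # Simulate the participant's rating (stored as an index 0..K-1).
        rating_idx = rng.choice(n_levels, p=true_probs[arm])
        # Conjugate update: add one count to the observed rating category.
        alpha[arm, rating_idx] += 1
        pulls[arm] += 1

    return pulls, alpha


# Two hypothetical arms on a 5-point scale; arm 1 tends to get higher ratings.
rng = np.random.default_rng(0)
true_probs = [[0.40, 0.30, 0.20, 0.05, 0.05],
              [0.05, 0.05, 0.20, 0.30, 0.40]]
pulls, alpha = thompson_rating(true_probs, horizon=500, rng=rng)
print("pulls per arm:", pulls)
```

Because arms are chosen by sampling from the posterior rather than by its mean, an arm with few observations still has a chance of being played (exploration), while allocation concentrates on the better-rated arm as evidence accumulates (exploitation).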