Multinomial Thompson Sampling for Online Sequential Decision Making with Rating Scales (Invited Seminar @ Federico II di Napoli)

Abstract

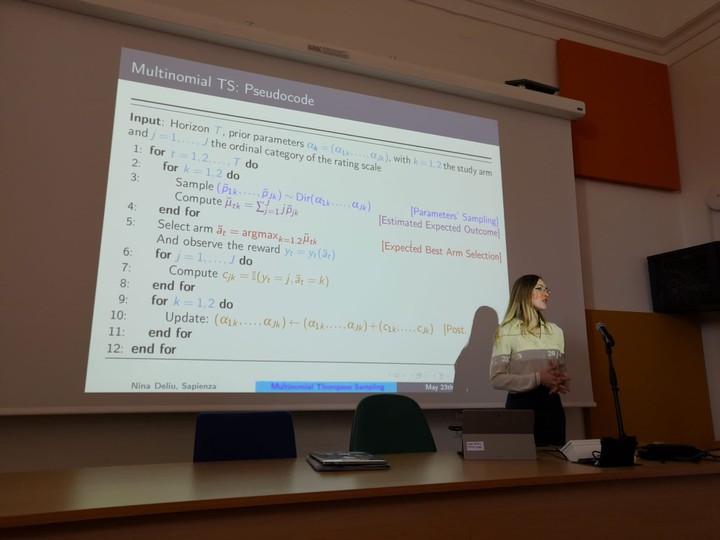

Multi-armed bandit algorithms such as Thompson sampling (TS) have been put forth for decades as useful tools for optimizing sequential decision-making in online experiments. By skewing the allocation ratio towards superior arms, they can minimize exposure to less promising arms and substantially improve participants’ outcomes. For example, they may use continuously collected ratings to enhance their overall experience with a program. However, most TS variants assume either a binary or a normal outcome model, leading to suboptimal performances in rating scale data. Motivated by our real-world behavioral experiment, we introduce Multinomial-TS to more accurately incorporate rating scale preferences and redesign the study. Considerations on the prior’s role are further incorporated in order to mitigate the incomplete learning phenomenon typical of this Bayesian bandit algorithm.